Google researchers have made an AI that can generate minutes-long musical pieces from text prompts, and can even transform a whistled or hummed melody into other instruments, similar to how systems like DALL-E generate images from written prompts. The model is called MusicLM, and while you can’t play around with it yourself, the company has uploaded a number of samples it produced with the model.

The examples are impressive. There are 30-second snippets of what sound like real songs, created from paragraph-long descriptions that prescribe a genre, vibe, or even specific instruments, as well as five-minute-long pieces generated from one or two words like “melodic techno.” Perhaps my favorite is a demo of “story mode,” where the model is given a script to morph between prompts.

It may not be for everyone, but I could totally see a human composing this. The demo site also features examples of what the model produces when asked to generate 10-second clips of instruments like the cello or maracas (the latter is one case where the system does a relatively poor job), eight-second clips of a certain genre, music that would fit a prison escape, and even what a beginner piano player would sound like versus an advanced one. It also includes interpretations of phrases like “futuristic club” and “accordion death metal.”

MusicLM can even simulate human vocals, and while it seems to get the tone and overall sound of voices right, there’s a quality to them that’s definitely off. The best way I can describe it is that they sound grainy or staticky. That quality isn’t equally noticeable in every sample, but it comes through clearly in some of them.

Here is an example of a story-mode prompt:

electronic song played in a videogame (0:00-0:15)

meditation song played next to a river (0:15-0:30)

fire (0:30-0:45)

fireworks (0:45-0:60)

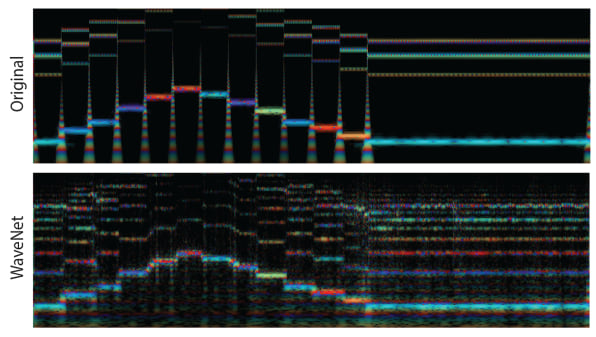
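MusicLM itself isn’t publicly available, so there is no real API to show here. As a rough illustration of what a story-mode “script” amounts to, the sketch below parses the prompt above into a list of (prompt, start, end) segments, one per time window. The names `Segment`, `parse_story`, and `to_seconds` are hypothetical, and the `prompt (m:ss-m:ss)` line format is an assumption based only on the example shown; this is not how Google represents or feeds prompts to the model.

```python
import re
from dataclasses import dataclass


@dataclass
class Segment:
    """One story-mode step: a text prompt and the time window it covers (hypothetical)."""
    prompt: str
    start_s: int
    end_s: int


# Assumed line format: a text prompt followed by "(m:ss-m:ss)", e.g. "fire (0:30-0:45)".
LINE_RE = re.compile(r"^(?P<prompt>.+?)\s*\((?P<start>\d+:\d{2})-(?P<end>\d+:\d{2})\)$")


def to_seconds(stamp: str) -> int:
    """Convert "m:ss" to seconds; "0:60" is accepted and becomes 60, as in the example."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + int(seconds)


def parse_story(script: str) -> list[Segment]:
    """Turn a timed script into an ordered list of segments, skipping blank lines."""
    segments = []
    for line in script.splitlines():
        line = line.strip()
        if not line:
            continue
        match = LINE_RE.match(line)
        if match is None:
            raise ValueError(f"unrecognized line: {line!r}")
        segments.append(
            Segment(match["prompt"], to_seconds(match["start"]), to_seconds(match["end"]))
        )
    return segments


STORY = """electronic song played in a videogame (0:00-0:15)
meditation song played next to a river (0:15-0:30)
fire (0:30-0:45)
fireworks (0:45-0:60)"""

if __name__ == "__main__":
    for seg in parse_story(STORY):
        print(f"{seg.start_s:>2}s to {seg.end_s:>2}s: {seg.prompt}")
```

Viewed this way, the example is four back-to-back 15-second windows, each with its own text description for the model to transition between.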

AI-generated music has a long history dating back decades; systems have been credited with composing pop songs, copying Bach better than a human could in the 1990s, and accompanying live performances. One recent project uses the AI image generation engine Stable Diffusion to turn text prompts into spectrograms, which are then converted into music. According to the paper, MusicLM outperforms other systems in terms of its “quality and adherence to the caption,” as well as in its ability to take in audio and copy the melody.